3 Public opinion on AI governance

3.1 Americans consider many AI governance challenges to be important; prioritize data privacy and preventing AI-enhanced cyber attacks, surveillance, and digital manipulation

We sought to understand how Americans prioritize policy issues associated with AI. Respondents were asked to consider five AI governance challenges, randomly selected from a set of 13 (see Appendix B for the text); the order in which these five were presented to each respondent was also randomized.

After considering each governance challenge, respondents were asked how likely they think it is that the challenge will affect large numbers of people 1) in the U.S. and 2) around the world within 10 years.
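The randomization described above can be sketched as follows. This is a minimal illustration, not the survey software's actual logic: the short challenge labels are paraphrased from this section (Appendix B has the exact text), and `assign_challenges` and the seed are hypothetical names and values chosen for the example.

```python
import random

# Short labels paraphrased from this section; the exact wording shown to
# respondents is in Appendix B.
CHALLENGES = [
    "data privacy", "cyber attacks", "surveillance", "digital manipulation",
    "autonomous vehicles", "value alignment", "hiring bias",
    "U.S.-China arms race", "disease diagnosis", "technological unemployment",
    "criminal justice bias", "critical AI systems failures",
    "autonomous weapons",
]

def assign_challenges(rng, k=5):
    """Pick k distinct challenges for one respondent, in random order."""
    # random.sample draws without replacement and returns the items in
    # selection order, so one call covers both the selection and the
    # order randomization.
    return rng.sample(CHALLENGES, k)

rng = random.Random(2018)  # seed is arbitrary, for reproducibility only
shown = assign_challenges(rng)
print(shown)
```

Because each respondent sees only five of the 13 items, each challenge is evaluated by roughly 5/13 of the sample.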

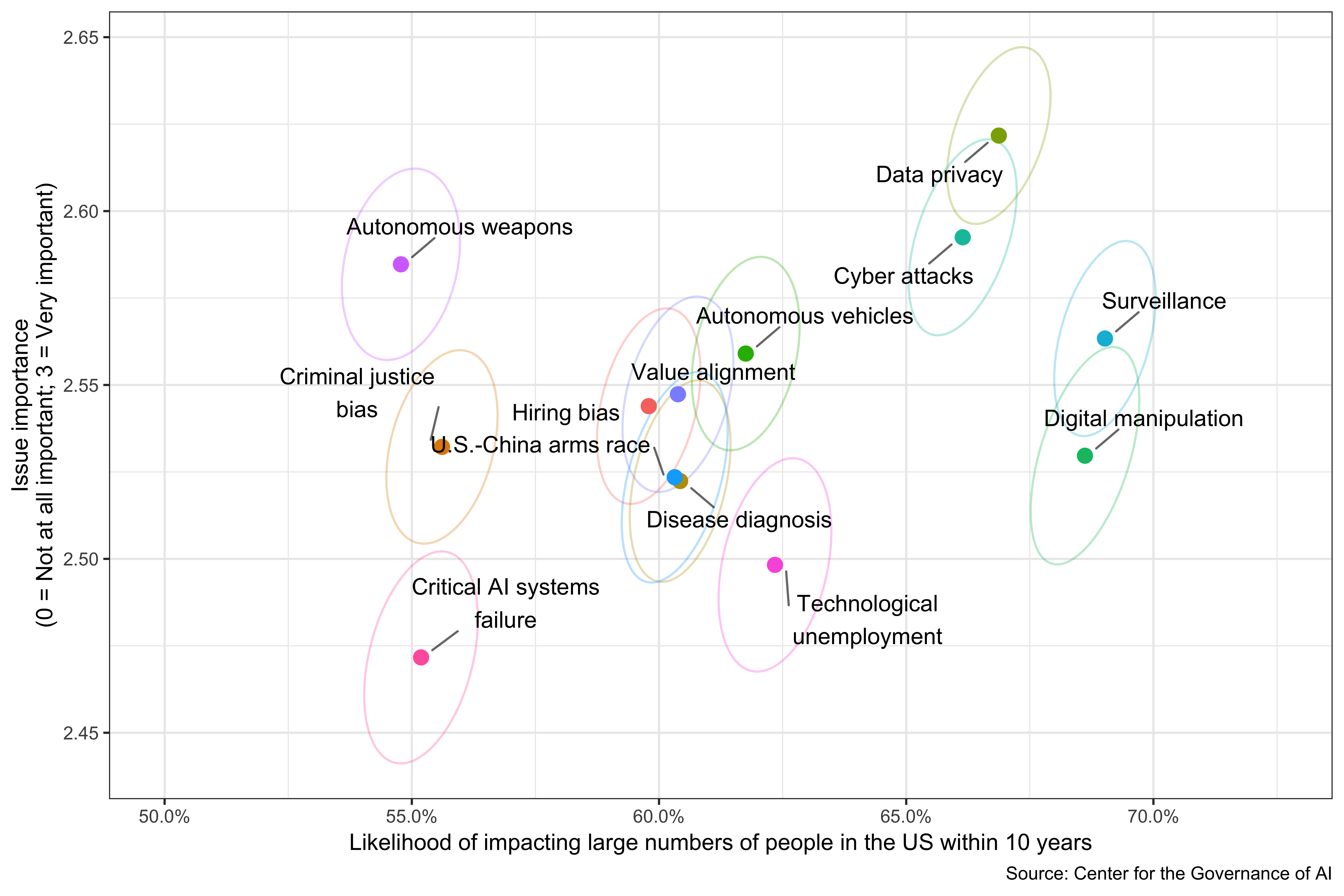

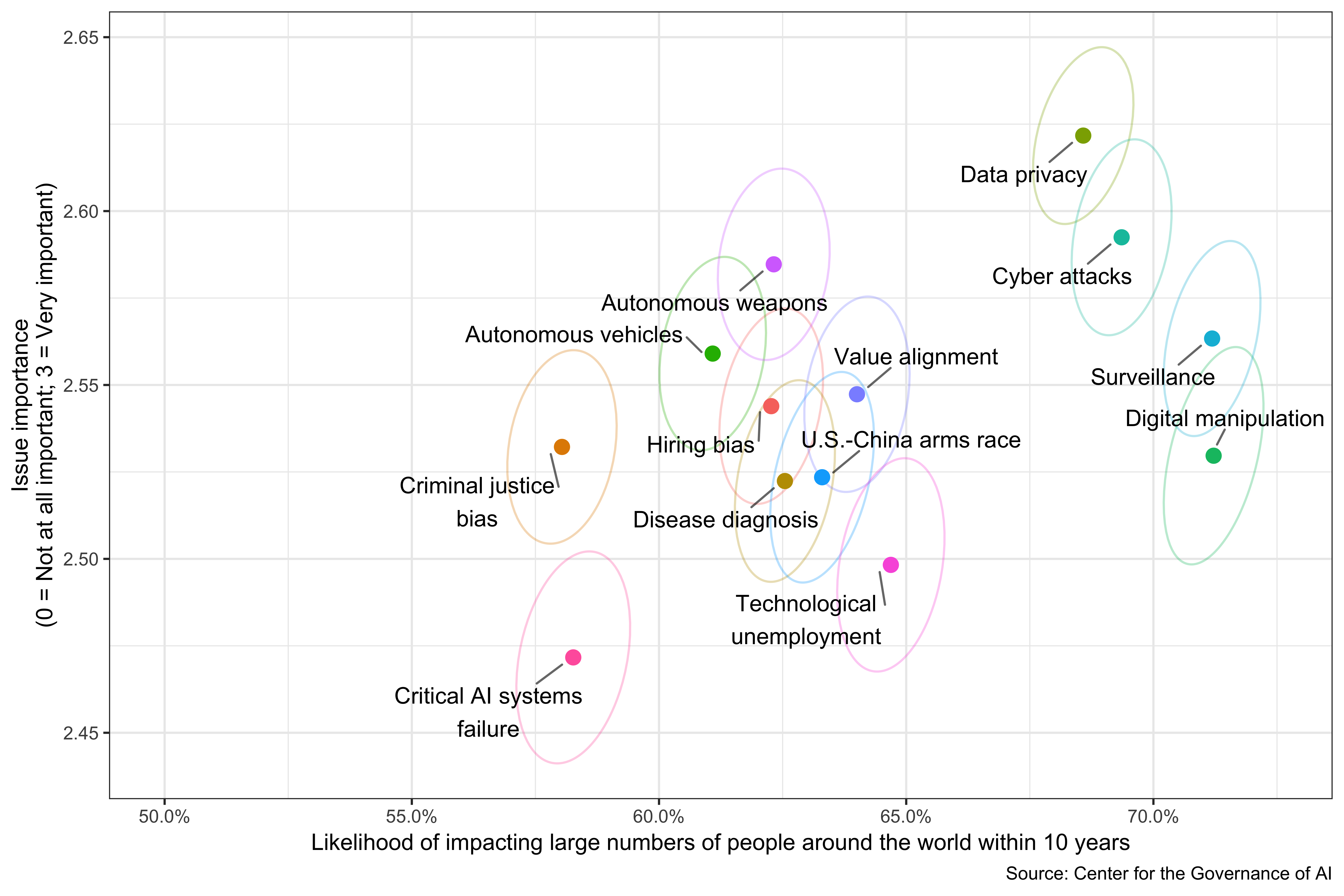

We use scatterplots to visualize our survey results. In Figure 3.1, the x-axis is the perceived likelihood of the problem happening to large numbers of people in the U.S. In Figure 3.2, the x-axis is the perceived likelihood of the problem happening to large numbers of people around the world. The y-axes of both figures represent respondents’ perceived issue importance, from 0 (not at all important) to 3 (very important). Each dot represents the mean perceived likelihood and issue importance, and the corresponding ellipse represents the 95% bivariate confidence region.
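To make the ellipses concrete, here is a rough sketch of how a 95% confidence region for a bivariate mean can be computed. It assumes unweighted responses and a large-sample normal approximation; the report does not state how the plotted regions were constructed, so this may differ from the authors' procedure (for example, in the use of survey weights). The function name `confidence_ellipse` is hypothetical.

```python
import math
from statistics import mean

def confidence_ellipse(xs, ys, level=0.95):
    """Return (center, semi-major, semi-minor, angle) of a confidence
    ellipse for the mean of paired observations (xs, ys)."""
    n = len(xs)
    mx, my = mean(xs), mean(ys)
    # Sample variances and covariance of the observations.
    sxx = sum((x - mx) ** 2 for x in xs) / (n - 1)
    syy = sum((y - my) ** 2 for y in ys) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)
    # Covariance matrix of the mean is the sample covariance divided by n.
    a, b, c = sxx / n, sxy / n, syy / n
    # Closed-form eigenvalues of the symmetric matrix [[a, b], [b, c]].
    half_trace = (a + c) / 2
    root = math.hypot((a - c) / 2, b)
    lam_major, lam_minor = half_trace + root, max(half_trace - root, 0.0)
    # Chi-square quantile with 2 degrees of freedom has a closed form.
    q = -2 * math.log(1 - level)
    # Orientation of the major axis, in radians from the x-axis.
    angle = 0.5 * math.atan2(2 * b, a - c)
    return (mx, my), math.sqrt(q * lam_major), math.sqrt(q * lam_minor), angle
```

Here `xs` would hold one item's perceived-likelihood responses and `ys` the matching importance ratings on the 0 to 3 scale; a narrow, tilted ellipse indicates that the two means are estimated with correlated error.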

Americans consider all the AI governance challenges we present to be important: the mean perceived issue importance of each governance challenge is between “somewhat important” (2) and “very important” (3), though there is meaningful and discernible variation across items.

The AI governance challenges Americans think are most likely to impact large numbers of people, and are important for tech companies and governments to tackle, are found in the upper-right quadrant of the two plots. These issues include data privacy as well as AI-enhanced cyber attacks, surveillance, and digital manipulation. We note that the media widely covered these issues around the time of the survey.

There is a second set of governance challenges that are perceived, on average, as about 7% less likely and marginally less important. These include autonomous vehicles, value alignment, bias in using AI for hiring, the U.S.-China arms race, disease diagnosis, and technological unemployment. Finally, a third set of challenges is perceived, on average, as another 5% less likely and about equally important; it includes criminal justice bias and critical AI systems failures.

We also note that Americans predict that all of the governance challenges mentioned in the survey, besides protecting data privacy and ensuring the safety of autonomous vehicles, are more likely to impact people around the world than to affect people in the U.S. While most of the statistically significant differences are substantively small, one difference stands out: Americans think that autonomous weapons are 7.6 percentage points more likely to impact people around the world than people in the U.S. (See Appendix C for details of these additional analyses.)

We want to reflect on one result. “Value alignment” consists of an abstract description of the alignment problem and a reference to what sound like individual-level harms: “while performing jobs [they could] unintentionally make decisions that go against the values of its human users, such as physically harming people.” “Critical AI systems failures,” by contrast, references military or critical infrastructure uses, and unintentional accidents leading to “10 percent or more of all humans to die.” The latter was rated as less important than the former: we interpret this as a probability-weighted assessment of importance, since presumably the latter, were it to happen, is much more important. We thus think the issue importance question should be interpreted in a way that down-weights low-probability risks. This perspective also plausibly applies to the “impact” measure for our global risks analysis, which placed “harmful consequences of synthetic biology” and “failure to address climate change” as less impactful than most other risks.

Figure 3.1: Perceptions of AI governance challenges in the U.S.

Figure 3.2: Perceptions of AI governance challenges around the world

3.2 Americans who are younger or who have CS or engineering degrees express less concern about AI governance challenges

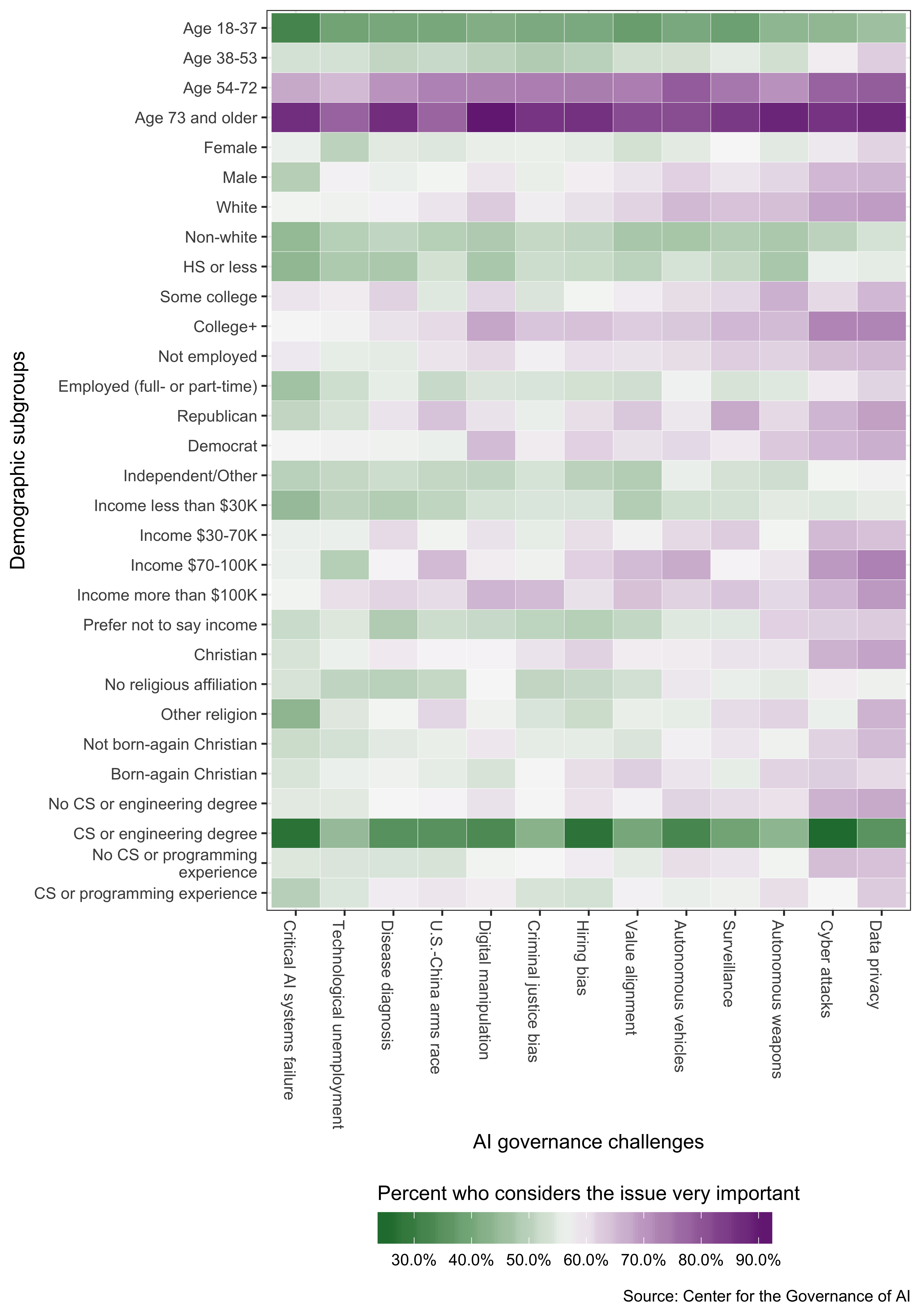

We performed further analysis by calculating the percentage of respondents in each subgroup who consider each governance challenge to be “very important” for governments and tech companies to manage. (See Appendix C for additional data visualizations.) In general, differences in responses are more salient across demographic subgroups than across governance challenges. In a linear multiple regression predicting perceived issue importance using demographic subgroups, governance challenges, and the interaction between the two, we find that the stronger predictors are demographic subgroup variables, including age group and having CS or programming experience.
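The subgroup percentages described above amount to a grouped tabulation. The sketch below is a simplified, unweighted version that assumes importance is coded 0 to 3 with 3 meaning “very important,” as on the figure axes; `share_very_important` and the example rows are hypothetical and are not survey data.

```python
from collections import defaultdict

def share_very_important(responses):
    """Percentage rating each challenge 'very important' (coded 3),
    broken out by (subgroup, challenge).

    responses: iterable of (subgroup, challenge, importance) tuples.
    """
    counts = defaultdict(lambda: [0, 0])  # key -> [very important, total]
    for subgroup, challenge, importance in responses:
        cell = counts[(subgroup, challenge)]
        cell[0] += importance == 3
        cell[1] += 1
    return {key: 100 * hits / total for key, (hits, total) in counts.items()}

# Made-up rows for illustration only.
rows = [
    ("73 and older", "data privacy", 3),
    ("73 and older", "data privacy", 3),
    ("under 38", "data privacy", 3),
    ("under 38", "data privacy", 1),
]
shares = share_very_important(rows)
print(shares)
```

An analysis of real survey data would typically also apply survey weights, which this sketch omits.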

Two highly visible patterns emerge from our data visualization. First, a higher percentage of older respondents, compared with younger respondents, consider nearly all AI governance challenges to be “very important.” As discussed previously, we find that older Americans, compared with younger Americans, are less supportive of developing AI. Our results here might explain this age gap: older Americans see each AI governance challenge as substantially more important than do younger Americans. Whereas 85% of Americans older than 73 consider each of these issues to be very important, only 40% of Americans younger than 38 do.

Second, those with CS or engineering degrees, compared with those who do not, rate all AI governance challenges as less important. This result could explain our previous finding that those with CS or engineering degrees tend to exhibit greater support for developing AI.[8]

Figure 3.3: AI governance challenges: issue importance by demographic subgroups

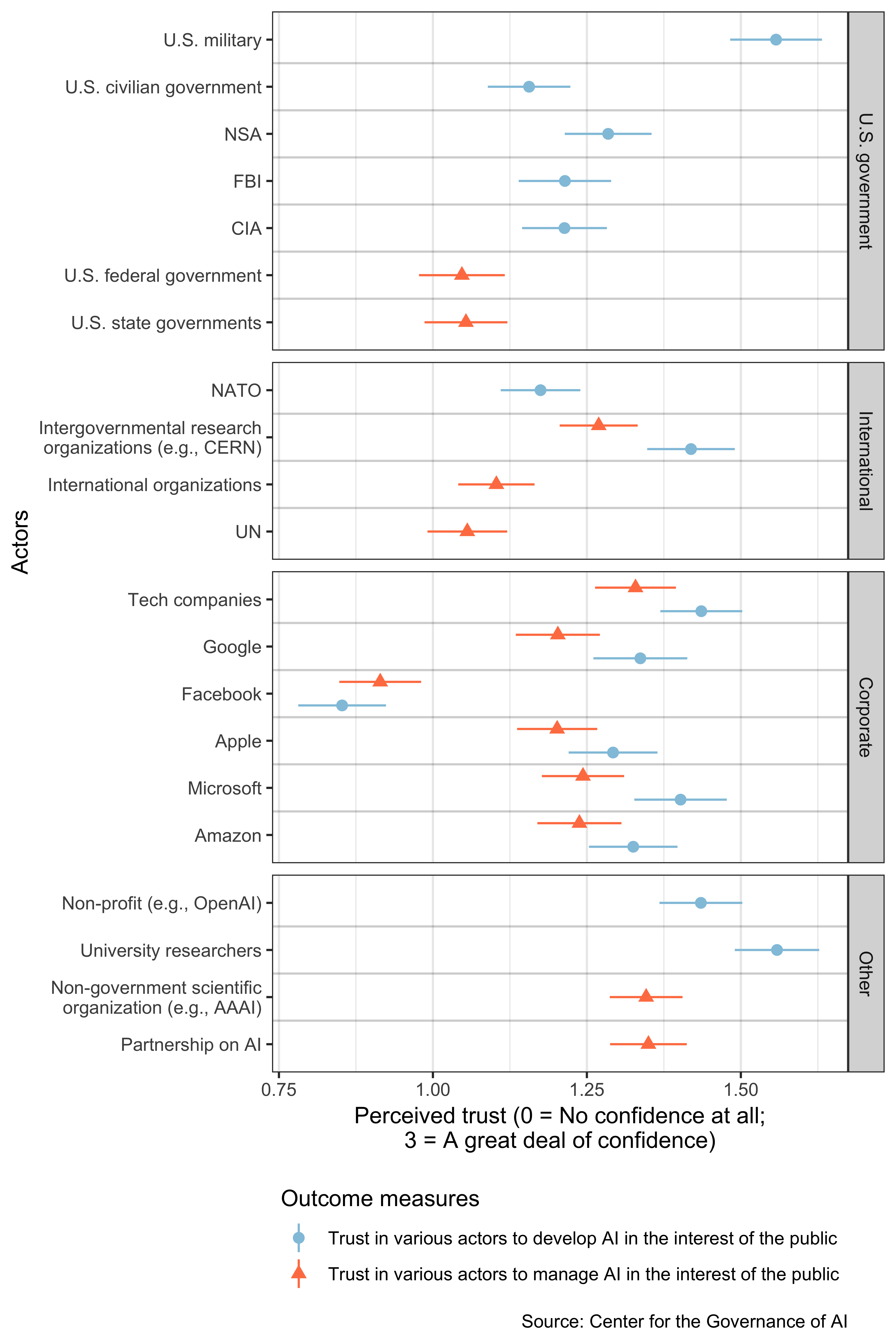

3.3 Americans place the most trust in the U.S. military and universities to build AI; trust tech companies and non-governmental organizations more than the government to manage the technology

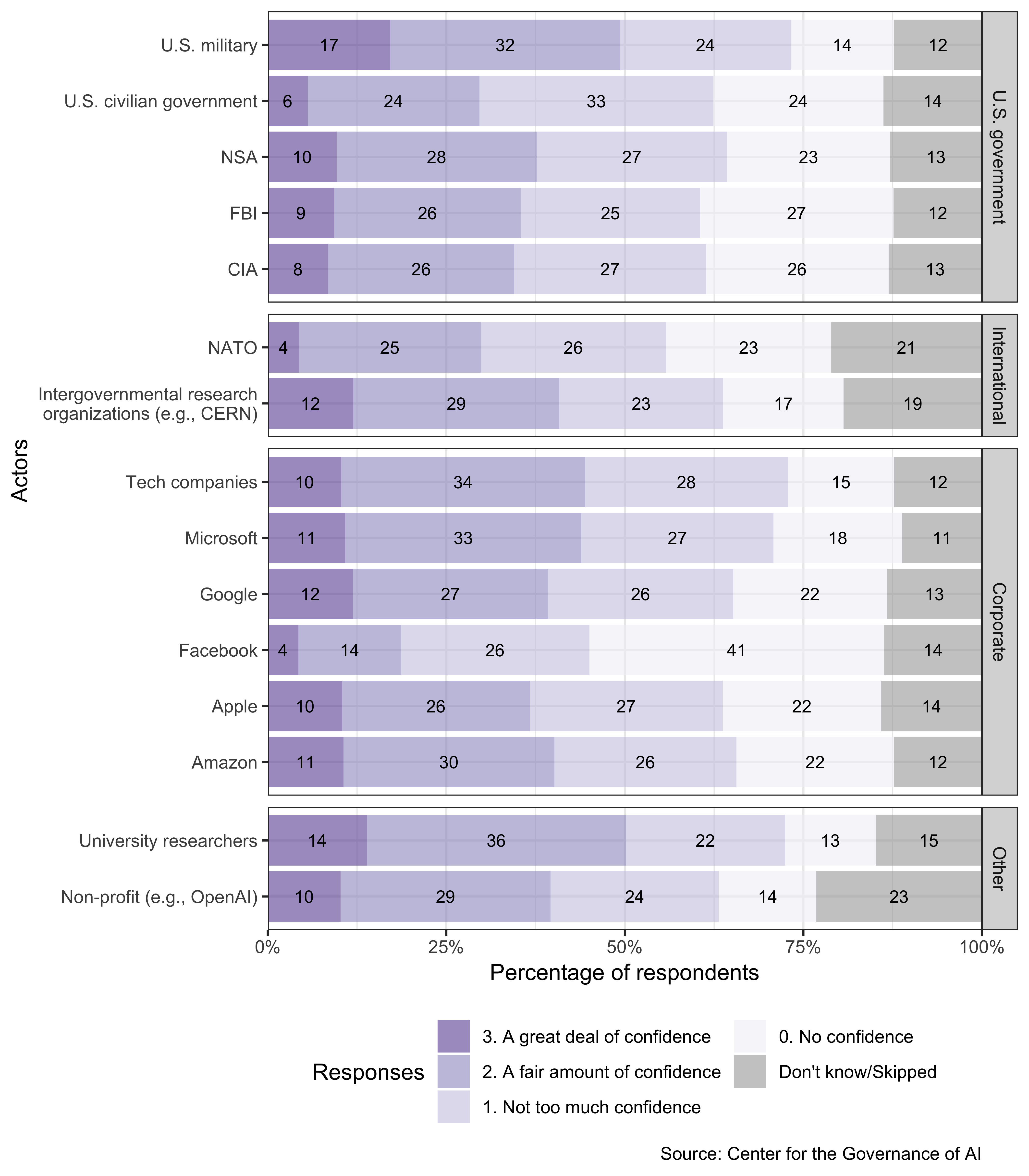

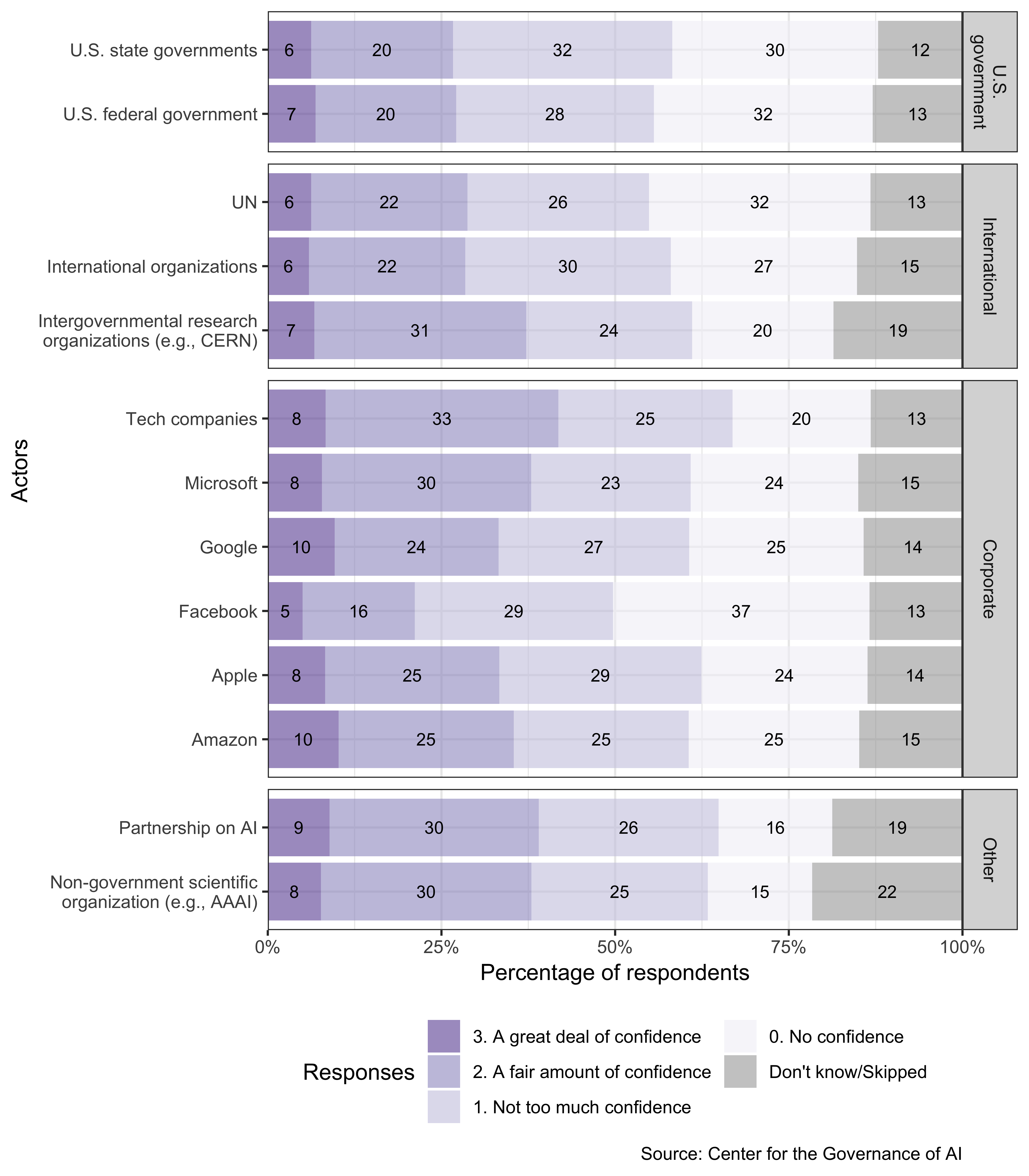

Respondents were asked how much confidence they have in various actors to develop AI. They were randomly assigned five actors out of 15 to evaluate. We provided a short description of actors that are not well-known to the public (e.g., NATO, CERN, and OpenAI).

Also, respondents were asked how much confidence, if any, they have in various actors to manage the development and use of AI in the best interests of the public. They were randomly assigned five out of 15 actors to evaluate. Again, we provided a short description of actors that are not well-known to the public (e.g., AAAI and Partnership on AI). Confidence was measured using the same four-point scale described above.[9]

Americans do not express great confidence in most actors to develop or to manage AI, as reported in Figures 3.4 and 3.5. A majority of Americans do not have a “great deal” or even a “fair amount” of confidence in any institution, except university researchers, to develop AI. Furthermore, Americans place greater trust in tech companies and non-governmental organizations (e.g., OpenAI) than in governments to manage the development and use of the technology.

University researchers and the U.S. military are the most trusted groups to develop AI: about half of Americans express a “great deal” or a “fair amount” of confidence in them. Americans express slightly less confidence in tech companies, non-profit organizations (e.g., OpenAI), and American intelligence organizations. Nevertheless, opinions toward individual actors within each of these groups vary. For example, while 44% of Americans indicated they feel a “great deal” or a “fair amount” of confidence in tech companies, they rate Facebook as the least trustworthy of all the actors. More than four in 10 indicate that they have no confidence in the company.[10]

The results on the public’s trust of various actors to manage the development and use of AI are similar to the results discussed above. Again, a majority of Americans do not have a “great deal” or even a “fair amount” of confidence in any institution to manage AI. In general, the public expresses greater confidence in non-governmental organizations than in governmental ones. Indeed, 41% of Americans express a “great deal” or a “fair amount” of confidence in “tech companies,” compared with 26% who feel that way about the U.S. federal government. But when presented with individual big tech companies, Americans indicate less trust in each than in the broader category of “tech companies.” Once again, Facebook stands out as an outlier: respondents give it a much lower rating than any other actor. Besides “tech companies,” the public places relatively high trust in intergovernmental research organizations (e.g., CERN), the Partnership on AI, and non-governmental scientific organizations (e.g., AAAI). Nevertheless, because the public is less familiar with these organizations, about one in five respondents give a “don’t know” response.

Mirroring our findings, recent survey research suggests that while Americans feel that AI should be regulated, they are unsure who the regulators should be. When asked who “should decide how AI systems are designed and deployed,” half of Americans indicated they do not know or refused to answer (West 2018a). Our survey results seem to reflect Americans’ general attitudes toward public institutions. According to a 2016 Pew Research Center survey, an overwhelming majority of Americans have “a great deal” or “a fair amount” of confidence in the U.S. military and scientists to act in the best interest of the public. In contrast, public confidence in elected officials is much lower: 73% indicated that they have “not too much” or “no confidence” (Funk 2017). Less than one-third of Americans thought that tech companies do what’s right “most of the time” or “just about always”; moreover, more than half think that tech companies have too much power and influence in the U.S. economy (Smith 2018). Nevertheless, Americans’ attitude toward tech companies is not monolithic but varies by company. For instance, our research findings reflect the results from a 2018 survey, which reported that a higher percentage of Americans trusted Apple, Google, Amazon, Microsoft, and Yahoo to protect user information than trusted Facebook to do so (Ipsos and Reuters 2018).

Figure 3.4: Trust in various actors to develop AI: distribution of responses

Figure 3.5: Trust in various actors to manage AI: distribution of responses

Figure 3.6: Trust in various actors to develop and manage AI in the interest of the public

References

Funk, Cary. 2017. “Real Numbers: Mixed Messages About Public Trust in Science.” Issues in Science and Technology 34 (1). https://perma.cc/UF9P-WSRL.

Ipsos and Reuters. 2018. “Facebook Poll 3.23.18.” Survey report. Ipsos; Reuters. https://perma.cc/QGH5-S3KE.

Smith, Aaron. 2018. “Public Attitudes Toward Technology Companies.” Survey report. Pew Research Center. https://perma.cc/KSN6-6FRW.

West, Darrell M. 2018a. “Brookings Survey Finds Divided Views on Artificial Intelligence for Warfare, but Support Rises If Adversaries Are Developing It.” Survey report. Brookings Institution. https://perma.cc/3NJV-5GV4.

[8] In Table C.15, we report the results of a saturated linear model using demographic variables, governance challenges, and the interaction between these two types of variables to predict perceived issue importance. We find that those who are 54-72 or 73 and older, relative to those who are below 38, view the governance issues as more important (two-sided \(p\)-value < 0.001 for both comparisons). Furthermore, we find that those who have CS or engineering degrees, relative to those who do not, view the governance challenges as less important (two-sided \(p\)-value < 0.001).

[9] The two sets of 15 actors differed slightly because for some actors it seemed inappropriate to ask one or the other question. See Appendix B for the exact wording of the questions and descriptions of the actors.

[10] Our survey was conducted between June 6 and 14, 2018, shortly after the fallout of the Facebook/Cambridge Analytica scandal. On April 10-11, 2018, Facebook CEO Mark Zuckerberg testified before the U.S. Congress regarding the Cambridge Analytica data leak. On May 2, 2018, Cambridge Analytica announced its shutdown. Nevertheless, Americans’ distrust of the company existed before the Facebook/Cambridge Analytica scandal. In a pilot survey that we conducted on Mechanical Turk during July 13-14, 2017, respondents indicated a substantially lower level of confidence in Facebook, compared with other actors, to develop and regulate AI.